Introduction

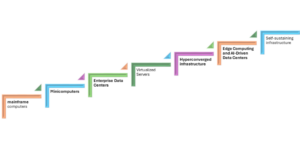

A Short Story: The Evolution of Data Centers

It was a quiet evening in 1965 when James, an IT engineer at a major corporation, walked into a large, dimly lit room filled with humming mainframes. His job was to keep these massive machines, with their flashing lights and spinning tape reels, running without failure. The data center, as they called it, was the heart of the company’s operations. Over the years, James witnessed remarkable changes: from bulky mainframes to sleek, efficient servers and, eventually, to cutting-edge hyperconverged systems. Decades later, in 2025, his grandson Alex works in an AI-powered data center where most operations are automated, optimized, and more energy-efficient than ever before. This evolution of data centers has not only transformed businesses but also shaped the digital world as we know it.

On-premises data centers have played a critical role in IT infrastructure for decades, powering businesses, governments, and research institutions. While cloud computing has gained popularity, on-premises data centers remain indispensable for organizations that require control, security, and performance. Their evolution spans several decades, marked by technological breakthroughs, increased efficiency, and innovative solutions. This blog post traces the major milestones in that evolution, decade by decade.

1950s-1960s: The Birth of Data Centers

Mainframe Computing Era

The origins of on-premises data centers trace back to the 1950s, when companies like IBM introduced mainframe computers, with IBM’s entry marked by the IBM 701 in 1952. These early data centers were massive rooms filled with vacuum-tube computers that required extensive cooling and power. The late 1950s and 1960s brought a significant transition to transistor-based mainframes, which improved processing power while reducing heat generation. Punched card systems, already long established for tabulation, remained central to data entry and storage during this period, laying a foundation for modern data processing. Cooling techniques also evolved from basic fans and air conditioning to liquid cooling in some large systems, improving operational stability.

1970s: The Rise of Minicomputers and Server Rooms

Emergence of Minicomputers

The introduction of minicomputers, such as the DEC PDP-11 (1970), enabled organizations to deploy smaller and more affordable computing units. This led companies to establish dedicated server rooms on their premises, marking an early stage in the evolution of on-premises data centers. Improvements in magnetic tape and disk storage made data retention and retrieval faster and more reliable. Early database management systems (DBMS) also appeared, improving how data was organized and accessed. Ethernet, developed at Xerox PARC in the mid-1970s and later standardized, laid the groundwork for future advancements in data communication.

1980s: The Personal Computer Revolution and Networking

Networking and Early Data Center Concepts

The rise of personal computers (PCs) and local area networks (LANs) transformed the IT infrastructure landscape, enabling more interconnected and efficient computing environments. Client-server architecture emerged, allowing companies to host applications and databases on dedicated servers. To improve reliability, data centers began incorporating uninterruptible power supplies (UPS) and backup generators. In 1988, IBM introduced the AS/400, giving businesses a robust midrange computing platform. The adoption of fiber optics in networking significantly improved data transmission speeds and reliability, while redundant power supplies and early load-balancing techniques increased server uptime and operational efficiency.

1990s: Standardization and Scalability

Enterprise Data Centers Take Shape

The 1990s saw the standardization of IT infrastructure through rack-mounted servers, structured cabling, and improved cooling systems. Companies like Dell, HP, and IBM began offering standardized server hardware, making it easier for businesses to scale their IT operations. Virtual Private Networks (VPNs) and Wide Area Networks (WANs) enabled remote access to on-premises data centers, improving connectivity. The adoption of RAID (Redundant Array of Independent Disks) technology improved data redundancy and fault tolerance. During this period, the first data center colocation facilities emerged, allowing businesses to share data center space for cost efficiency. Enhanced fire suppression systems, such as gas-based extinguishing agents, were also implemented to reduce the risk of fire damage.

2000s: Virtualization and Automation

The Era of Virtualization

The early 2000s marked the rise of server virtualization, led by VMware, which enabled multiple virtual machines (VMs) to run on a single physical server. Blade servers gained popularity by optimizing space and energy use in data centers. Automation tools were increasingly used to streamline server provisioning, backups, and security updates. Disaster recovery solutions and high-availability architectures became essential for business continuity. Storage Area Networks (SANs) and Network Attached Storage (NAS), which had first appeared in the 1990s, saw widespread adoption and transformed data storage and management. Advanced monitoring systems were also deployed to track server health and energy consumption, improving overall data center efficiency.

2010s: The Hyperconverged Infrastructure Revolution

Hyperconvergence and Software-Defined Solutions

The emergence of hyperconverged infrastructure (HCI) integrated compute, storage, and networking into a single system, streamlining data center operations. Software-defined networking (SDN) and software-defined storage (SDS) transformed data center management by improving flexibility and scalability. Cooling technologies improved significantly with the adoption of liquid cooling and airflow optimization techniques. As cybersecurity threats grew, stronger security approaches, including micro-segmentation and zero-trust architecture, were implemented to safeguard data. Containerization with Docker, together with orchestration platforms such as Kubernetes, enabled applications to be deployed in portable, scalable environments. GPU-accelerated servers and high-performance computing (HPC) clusters also unlocked new possibilities for AI, data analytics, and research applications, further advancing computational capabilities.

2020s: Edge Computing and AI-Driven Data Centers

Modern Innovations in On-Premises Data Centers

Edge computing is becoming a crucial component of modern on-premises data centers, reducing latency and enhancing real-time processing capabilities. AI-driven automation and predictive analytics are optimizing workload distribution and improving energy efficiency. The demand for sustainable data centers is increasing, with a focus on energy-efficient hardware and the adoption of renewable energy sources. Hybrid cloud solutions are bridging the gap between on-premises and cloud environments, providing greater flexibility and cost-effectiveness. Advanced security solutions, including AI-driven threat detection and response, are strengthening data protection measures. Additionally, ongoing research in quantum computing is beginning to influence data center strategies, with the potential to reshape computing in the coming decades.

2050: The Future of On-Premises Data Centers

A Glimpse into the Future

By 2050, on-premises data centers could be fully autonomous, leveraging AI and quantum computing for ultra-fast data processing. Modular, self-healing infrastructure may enable real-time hardware and software adjustments, bringing downtime close to zero. Nanotechnology-based cooling systems could significantly reduce energy consumption and improve heat dissipation. Traditional server management consoles may give way to holographic and immersive interfaces that allow real-time monitoring through augmented reality. Data centers could become far more environmentally friendly, built with biodegradable and sustainable hardware components. Edge AI and distributed computing will likely make real-time data processing seamless, minimizing latency. Quantum encryption and AI-driven cybersecurity could also provide near-impenetrable protection against cyber threats, greatly strengthening data security.

The evolution of on-premises data centers reflects the rapid advancement of computing technology, from mainframes to AI-driven systems. Looking ahead, edge computing, AI, and sustainability will shape the future and keep on-premises data centers a crucial part of IT infrastructure. With ongoing advancements, the future promises a new era of intelligent, self-sustaining data centers. What once seemed like science fiction in films such as Terminator and Iron Man (glowing cables, AI-powered automation, holographic interfaces, and quantum security) is steadily moving toward reality. The visions from futuristic movies are no longer pure imagination; they are blueprints for the next generation of technological marvels. I am excited to see what Alex’s grandchildren will manage.

If you need deep observability across your diverse data centers, reach out to Virtana; we will be happy to help you succeed.

About the Author

Meeta Lalwani is a Director of Product Management leading the AIOps (Artificial Intelligence for IT Operations) and GenAI portfolio for the SaaS (Software as a Service) platform at Virtana. She is passionate about modern technologies and their potential to positively impact human growth.